TL;DR:

• Google Messages deploys AI nudity detection to all Android users after months of testing

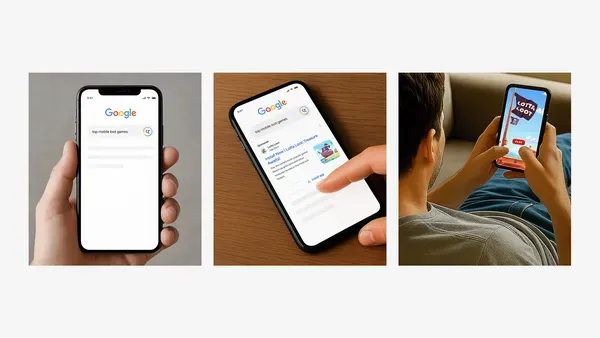

• Feature automatically blurs explicit images and warns senders about risks before sending

• Enabled by default for teen accounts and optional for adults; requires Google Account sign-in

• Marks Google's broader push into AI-powered content moderation across messaging platforms

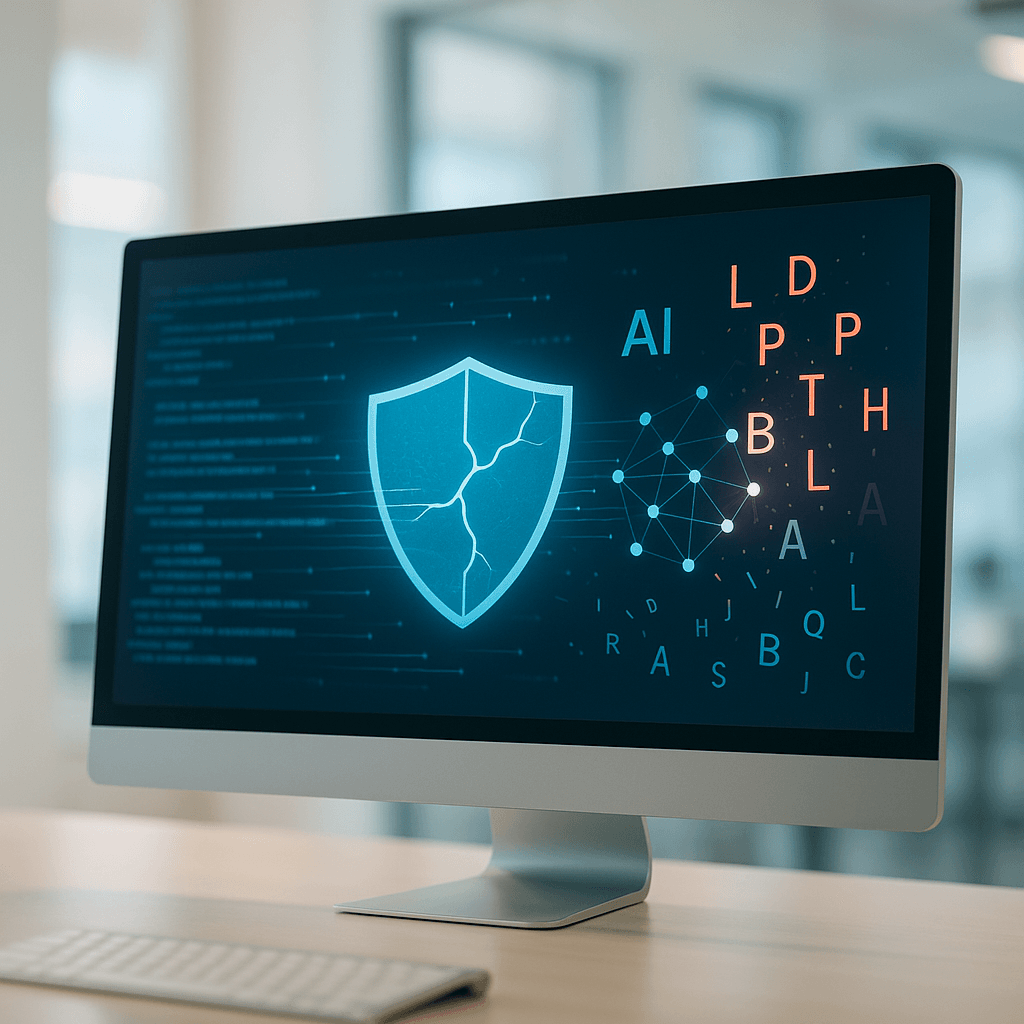

Google is finally rolling out its AI-powered Sensitive Content Warnings feature to all Android users after 10 months of beta testing. The machine learning system automatically detects and blurs nude images in Google Messages, letting recipients choose whether to view the image, delete it, or block the sender without being exposed to unwanted content.

Google just activated one of its most significant AI-powered safety features across the entire Android ecosystem. The company's Sensitive Content Warnings system is now live for all Google Messages users, automatically detecting and blurring nude images before they reach recipients' screens.

The rollout represents a major milestone in AI-driven content moderation, with Google's machine learning algorithms now processing millions of daily messages to identify explicit content. According to 9to5Google's reporting, the feature gives users immediate options to delete blurred images, block senders, or report inappropriate content without ever viewing the actual image.

What makes this deployment particularly notable is Google's dual-sided approach to prevention. The system doesn't just protect recipients—it actively intervenes when users attempt to send nude images. Senders now encounter warning messages explaining the risks of sharing explicit content and must deliberately swipe right to confirm transmission, creating a friction point that could reduce impulsive sharing.

Google first announced the Sensitive Content Warnings feature in October 2024, positioning it as part of a broader safety initiative targeting harassment and unwanted explicit content. The 10-month beta period suggests Google was fine-tuning the AI's accuracy to minimize false positives while ensuring genuine nudity detection remained robust.

The feature's architecture reveals Google's strategic privacy positioning. Unlike cloud-based content scanning, the nudity detection runs on-device: users must be signed into their Google Account, but images are processed locally. This approach addresses privacy concerns while still enabling the safety benefits of AI moderation.