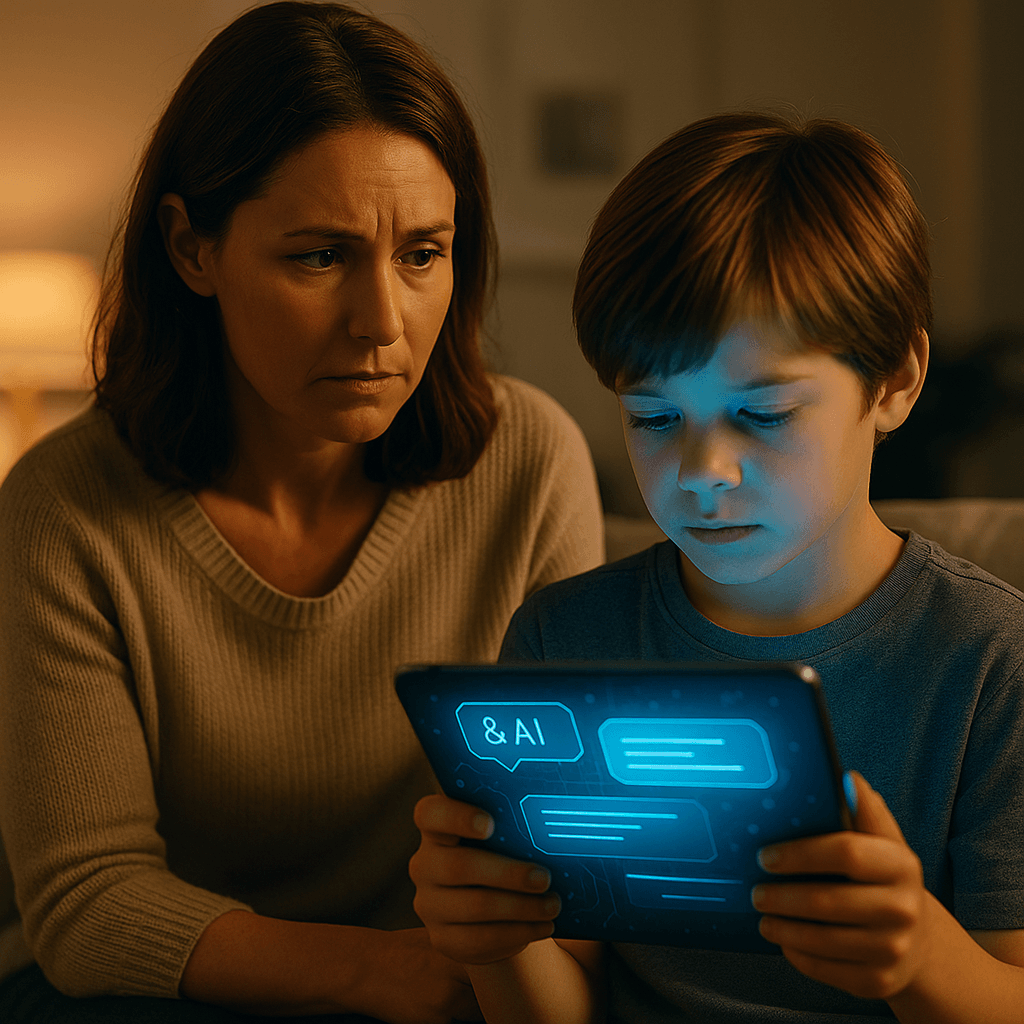

Texas Attorney General Ken Paxton has launched a sweeping investigation into Meta and Character.AI for allegedly marketing AI chatbots as mental health tools to children without proper credentials or oversight. The probe comes as federal pressure mounts over AI safety, with both companies now facing civil investigative demands that could reshape how tech giants handle vulnerable young users.

Meta and Character.AI are scrambling to respond as Texas Attorney General Ken Paxton escalates the fight over AI chatbots targeting children. The investigation, announced Monday, accuses both companies of "potentially engaging in deceptive trade practices and misleadingly marketing themselves as mental health tools" without proper medical credentials.

The timing couldn't be worse for the tech giants. Just days earlier, Senator Josh Hawley launched his own investigation into Meta after reports surfaced that its AI chatbots were flirting with children. Now Paxton is digging deeper into the business model itself, targeting how these companies monetize vulnerable young users.

"By posing as sources of emotional support, AI platforms can mislead vulnerable users, especially children, into believing they're receiving legitimate mental health care," Paxton stated in the official press release. "In reality, they're often being fed recycled, generic responses engineered to align with harvested personal data and disguised as therapeutic advice."

The investigation centers on how both platforms allow AI personas to present themselves as professional therapeutic tools. Among the millions of user-created bots on Character.AI is a highly popular "Psychologist" character that, according to BBC reporting, has attracted significant usage among young users. Meta doesn't explicitly offer therapy bots for children, but the company's AI Studio lets third-party creators build therapeutic personas that minors can access.