AI Agents & Memory

Hey Tech Buzzer,

After reading this email, you will understand what memory means in the context of AI, and you will meet an open-source YC company that has been soaring.

As a customer, I can tell you they’ve found a simple solution to one of the biggest… no, the biggest problem with AI agents today.

Memory. Done right. Three lines of code that make every AI agent orders of magnitude better and let it learn and evolve over time.

Coders, this is for you. If you aren’t an engineer, I’ll bet your company is building agents anyway. Forward this to anyone you know who is building them.

It’s free, so simple, and unbelievably good.

-Prerit

Meet Mem0.

The AI Memory Crisis: The #1 reason users abandon your AI app

Why AI Needs Memory

Poor memory is probably the #1 thing killing user engagement in your AI application.

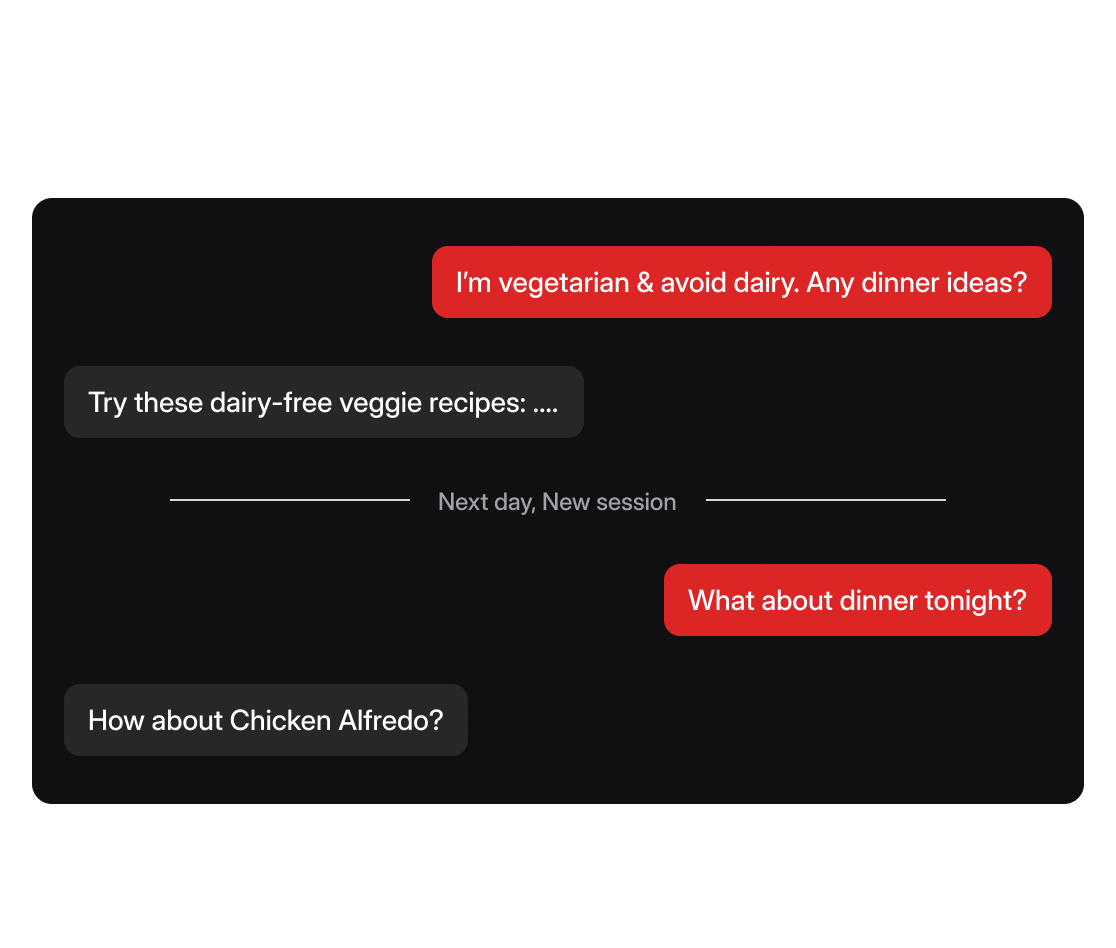

You've built a great AI app, but here's the problem: users have to repeat themselves every time they start a new session.

LLMs are powerful, but stateless by design. They don't remember previous interactions, user preferences, or evolving context. Each conversation starts from zero unless you rebuild context yourself.

To solve this problem, developers try cramming entire conversation histories into the context window, which causes a cascade of problems:

API costs that grow roughly quadratically as conversations get longer, since every new turn resends the whole history

Higher latency from processing massive context

Quality degradation as models struggle with information overload

Hallucinations from irrelevant historical data
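To put rough numbers on the cost point above, here is a back-of-the-envelope sketch. It compares resending the full history on every request with sending a small, fixed amount of context. The 200-tokens-per-turn average and the 5-turn cap are assumptions picked for illustration, not measurements.

```python
# Back-of-the-envelope cost model.
# TOKENS_PER_TURN is an assumed average, not a measured figure.
TOKENS_PER_TURN = 200


def full_history_tokens(turns: int, tokens_per_turn: int = TOKENS_PER_TURN) -> int:
    """Total input tokens over a conversation when every request
    resends all previous turns plus the new one."""
    # Request k carries k turns, so the total is 1 + 2 + ... + turns.
    return tokens_per_turn * turns * (turns + 1) // 2


def fixed_context_tokens(turns: int, kept_turns: int = 5,
                         tokens_per_turn: int = TOKENS_PER_TURN) -> int:
    """Total input tokens when each request carries at most `kept_turns`
    turns' worth of context (the new message plus a few retrieved memories)."""
    return sum(tokens_per_turn * min(k, kept_turns) for k in range(1, turns + 1))


for n in (10, 50, 100):
    print(n, full_history_tokens(n), fixed_context_tokens(n))
```

Under these assumptions, a 100-turn conversation sends about 1,010,000 input tokens with full history versus 98,000 with capped context. The gap keeps widening as the conversation grows.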

Why Memory Changes Everything

Memory transforms AI from a sophisticated search engine into something that feels genuinely intelligent and personal. It gives users the sense that the LLM is learning and growing with them, which makes every interaction more powerful.

Major AI companies recognize this. OpenAI (ChatGPT), Anthropic (Claude), Google (Gemini), and Microsoft (Copilot) have all shipped memory features for their consumer products. The reasoning is simple: users engage more deeply with AI that remembers their preferences, context, and ongoing projects.

What Developers Are Building with Smart Memory

Persistent memory is being used in creative ways:

Customer support bots remember product configs and history, cutting resolution times by 40%

Learning apps track knowledge gaps and adjust lessons, boosting completion rates by 60%

Personal assistants maintain long-term context for complex workflows

What Mem0 Adds

Mem0 is an open-source framework that gives your AI apps intelligent, long-term memory. Rather than just storing raw conversation logs, Mem0 automatically extracts and organizes meaningful facts, preferences, and context.

Key capabilities:

Intelligent extraction: Automatically identifies what's worth remembering from conversations

Semantic storage: Uses vector embeddings to store and retrieve contextually relevant memories

Adaptive updates: Learns when to update existing memories vs. creating new ones

Multi-user support: Maintains separate memory spaces for different users and contexts

Simple API: Add memory with just a few lines of code

In short, developers using Mem0 are building stateful systems that feel adaptive, personal, and context-aware.

Mem0 has quickly scaled to 39,000+ GitHub stars and 4M+ downloads, becoming developers' go-to memory solution because it handles the complexity while keeping implementation simple.
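To make "semantic storage" and "multi-user support" concrete, here is a toy sketch of the general idea: store each memory with a vector, keep a separate memory space per user, and retrieve by similarity. This is not Mem0's implementation. `ToyMemoryStore`, `embed`, and `cosine` are hypothetical names, and the bag-of-words "embedding" stands in for a real embedding model.

```python
import math
import re
from collections import Counter, defaultdict


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector.
    A real system would call an embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyMemoryStore:
    """Hypothetical in-memory store with one list of memories per user_id."""

    def __init__(self):
        self._by_user = defaultdict(list)

    def add(self, text: str, user_id: str) -> None:
        self._by_user[user_id].append((text, embed(text)))

    def search(self, query: str, user_id: str, limit: int = 3) -> list:
        q = embed(query)
        # Only this user's memories are scored, so users never see each other's data.
        scored = [(cosine(q, vec), text) for text, vec in self._by_user.get(user_id, [])]
        scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated memories
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:limit]]


store = ToyMemoryStore()
store.add("Prefers vegetarian food", user_id="alice")
store.add("Works as a data engineer in Berlin", user_id="alice")
store.add("Prefers window seats on flights", user_id="bob")

print(store.search("What food does she like?", user_id="alice", limit=1))
```

Only the relevant memory comes back, so the prompt stays short. A production system would replace the word counts with learned embeddings and add logic for deciding when to update an existing memory instead of adding a new one.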

Tech Buzz Founders Get 6 Months Free Pro Plan

🎁 Use code: STARTUPPROGRAM2025

Sample repositories and integration tutorials

Priority support for implementation questions

Direct access to the Mem0 team for technical guidance

Try It Yourself

Here’s how simple it is to add memory with Mem0:

from mem0 import Memory

memory = Memory()

# Store user info

memory.add("My name is Hassan and I work in growth at Mem0", user_id="user1")

# Retrieve it later (get_all returns every memory stored for this user)

print(memory.get_all(user_id="user1"))

That’s it. Just a few lines of code, and your app remembers. 🧠
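Once memories are stored, the usual next step is to pull the relevant ones into the prompt before calling your LLM. Here is a minimal sketch of that pattern. `build_prompt` and `FakeMemory` are hypothetical helpers written for this example. The sketch assumes the memory object exposes `search(query, user_id=..., limit=...)` and returns either a `{"results": [...]}` dict or a plain list of items with a `"memory"` field. Check the Mem0 docs for the exact shape in your version.

```python
def build_prompt(memory, user_id: str, question: str, limit: int = 3) -> str:
    """Fetch relevant memories and prepend them to the user's question."""
    found = memory.search(question, user_id=user_id, limit=limit)
    # Assumption: results arrive as {"results": [...]} or as a bare list,
    # and each item is a dict with a "memory" field.
    items = found.get("results", []) if isinstance(found, dict) else found
    facts = [item["memory"] for item in items]

    lines = ["Known facts about the user:"]
    lines += [f"- {fact}" for fact in facts] or ["- (none yet)"]
    lines.append(f"User question: {question}")
    return "\n".join(lines)


class FakeMemory:
    """Stand-in so the example runs offline; returns canned results."""

    def search(self, query, user_id=None, limit=3):
        canned = [{"memory": "Name is Hassan"}, {"memory": "Works in growth at Mem0"}]
        return {"results": canned[:limit]}


print(build_prompt(FakeMemory(), "user1", "Where do I work?"))
```

In a real app you would pass in your `Memory()` instance instead of `FakeMemory()` and send the resulting string to your model.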

Start building apps that remember — try out Mem0 today.

The Tech Buzz
