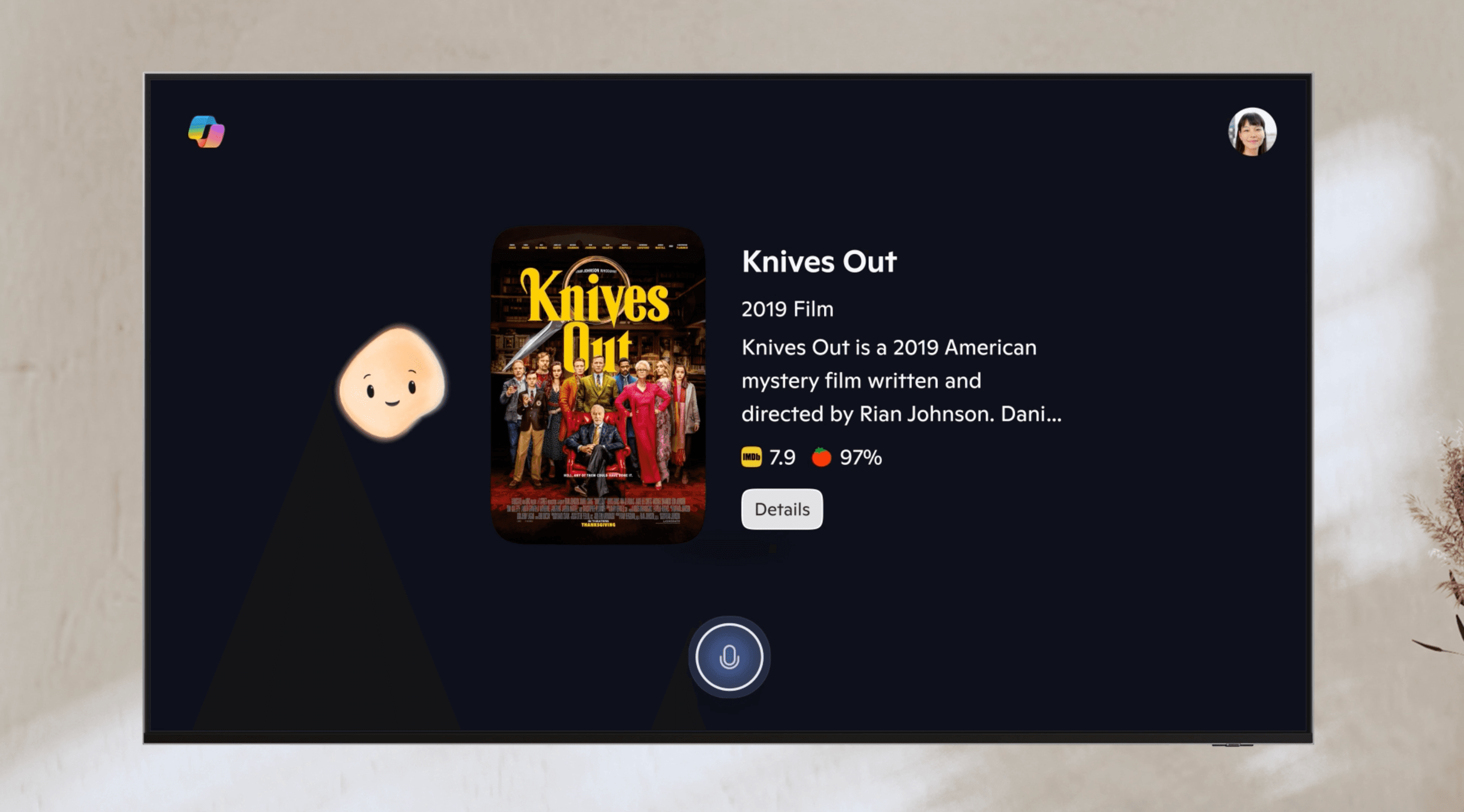

Google just dropped what could be a game-changer in AI image editing. A new feature in the Gemini app integrates the world's top-rated image editing model from Google DeepMind, promising to preserve a subject's likeness while transforming photos in ways earlier tools struggled to match. This isn't just another filter upgrade; it could be a fundamental shift in how we interact with our digital memories.

Google is making a bold play in the AI image editing wars. The tech giant just announced that its Gemini app now features what independent testing ranks as the world's highest-rated image editing model, developed by Google DeepMind. The timing looks strategic: as competitors like Meta and Adobe battle for creative AI dominance, Google is betting that character consistency will be the differentiating factor.

"People have been going bananas over it already in early previews," writes David Sharon, Multimodal Generation Lead for Gemini Apps, in today's announcement. The enthusiasm may be justified: early users report that the model largely avoids the uncanny-valley results that have long plagued AI image editing.

The breakthrough centers on what Google calls "character consistency": the ability to maintain a person's or pet's likeness across dramatic transformations. Where previous AI editors often produced results that were "close but not quite the same," this model preserves the essential features that make a subject recognizable, whether that's a person transformed into a beehive-wearing 1960s time traveler or a chihuahua in a tutu.

Google's timing reflects broader industry trends. Meta has been aggressively pushing AI-generated content across its platforms, while Adobe continues to expand its Firefly capabilities. Google's approach differs, however: rather than shipping a standalone tool, it is building advanced editing directly into its conversational interface, so a complex edit can be as simple as describing what you want.

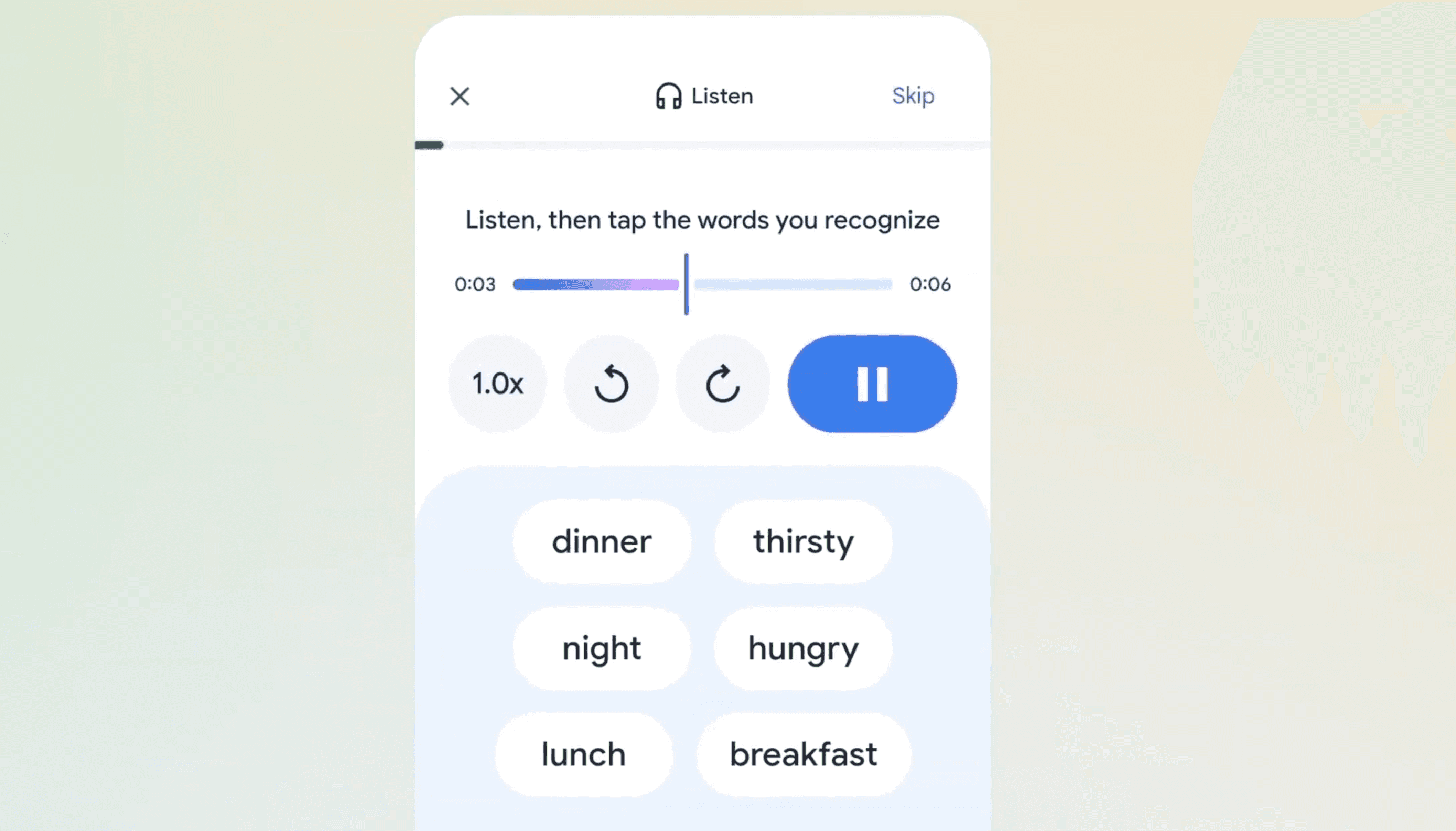

The technical capabilities showcase the latest advances in diffusion models. Users can now blend multiple photos seamlessly, for example combining a selfie with a pet photo to place both on a basketball court. Multi-turn editing allows iterative refinement: users can paint the walls, add furniture, and adjust the lighting in sequence, with each step preserving the earlier changes.