Nvidia is making headway on teaching AI common sense. The chip giant's new Cosmos Reason model topped Hugging Face's physical reasoning leaderboard after learning basic physics from human-curated question-and-answer pairs. The work targets a blind spot in AI: understanding that most birds can't fly backwards and that ice melts into water, knowledge humans take for granted but machines must be explicitly taught.

Nvidia is rewriting the playbook for AI reasoning, and the results are already showing up on leaderboards. The company's Cosmos Reason model just claimed the top spot on Hugging Face's physical reasoning benchmark, marking a significant milestone in teaching machines the kind of common sense humans develop naturally through real-world experience.

The breakthrough centers on a deceptively simple problem: while AI models excel at processing vast amounts of information, they struggle with basic physical understanding. They don't intuitively grasp that mirrors reflect light, ice transforms into water when heated, or that objects fall downward rather than upward. For humans, this knowledge feels automatic — the product of countless real-world interactions from childhood onward.

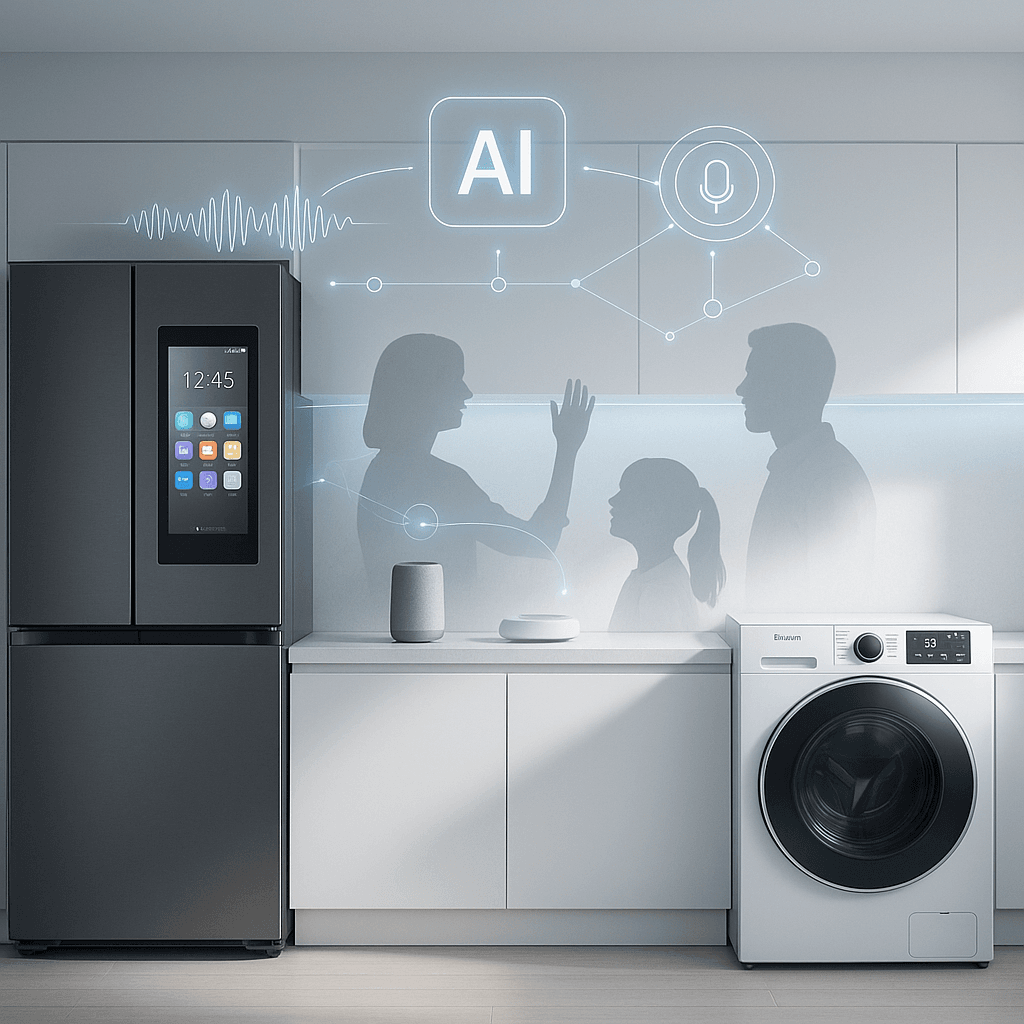

"Without basic knowledge about the physical world, a robot may fall down or accidentally break something, causing danger to the surrounding people and environment," explains Yin Cui, a Cosmos Reason research scientist at Nvidia. This isn't just an academic concern — it's a critical safety issue as AI systems increasingly operate in physical environments from factory floors to public roads.

Nvidia's solution involves what it calls a "data factory": a global team of analysts from diverse backgrounds including bioengineering, business, and linguistics. These human experts create the foundation for machine understanding by developing hundreds of thousands of question-and-answer pairs based on real-world video footage. The process resembles writing a massive, specialized exam for AI systems.

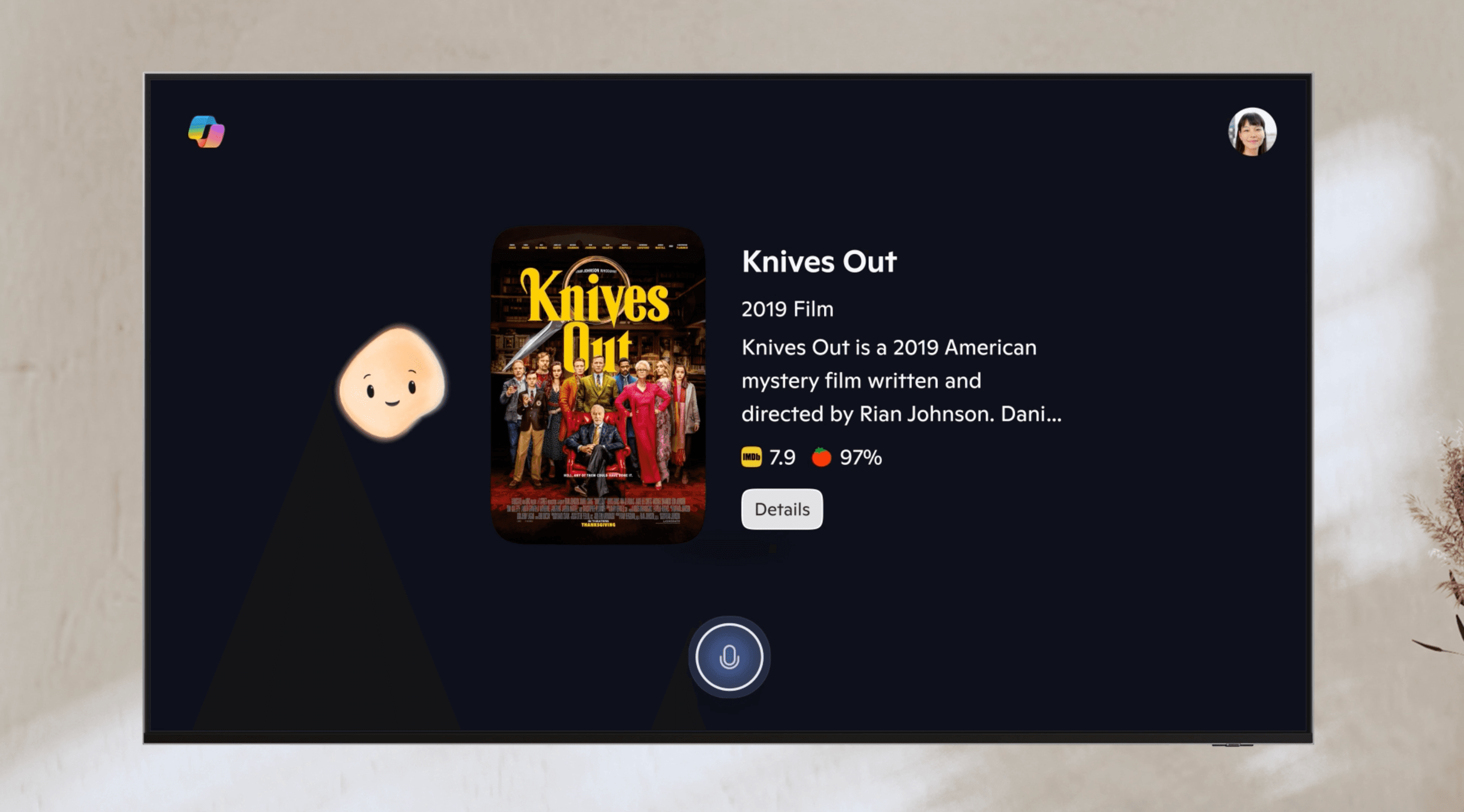

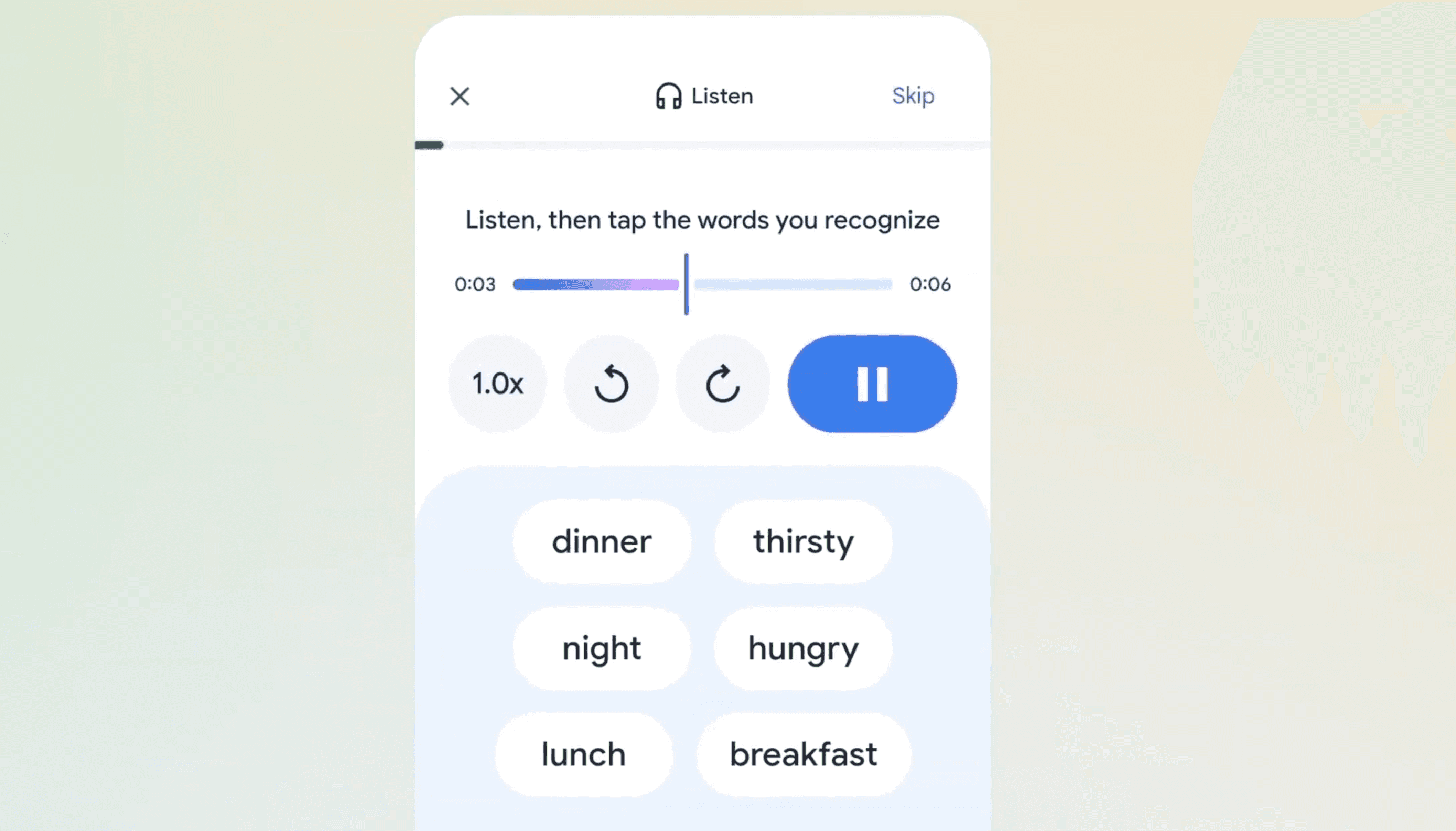

The curation process starts with annotators analyzing everyday scenarios captured on video — chickens walking in a coop, cars navigating rural roads, people preparing food. From a video of someone cutting spaghetti, an annotator might ask: "The person uses which hand to cut the spaghetti?" The team then develops four multiple-choice answers, forcing the AI model to reason through spatial relationships and cause-and-effect scenarios.
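To make the format concrete, here is a minimal Python sketch of how one such question-and-answer pair might be represented. The class name, field names, clip identifier, answer options, and the "correct" label are all hypothetical and chosen for illustration; the article does not describe Nvidia's actual data schema.

```python
from dataclasses import dataclass

LETTERS = "ABCD"


@dataclass(frozen=True)
class MultipleChoiceItem:
    """One hypothetical question-and-answer pair tied to a video clip."""
    video_id: str        # illustrative identifier, not a real dataset key
    question: str
    options: tuple       # exactly four candidate answers
    answer_index: int    # position of the correct option (0 to 3)

    def __post_init__(self):
        # Enforce the four-option format described in the article.
        if len(self.options) != 4:
            raise ValueError("expected exactly four answer options")
        if not 0 <= self.answer_index < 4:
            raise ValueError("answer_index must be between 0 and 3")

    def to_prompt(self) -> str:
        """Render the question followed by lettered options, one per line."""
        lines = [self.question]
        lines += [f"{LETTERS[i]}. {opt}" for i, opt in enumerate(self.options)]
        return "\n".join(lines)

    def correct_letter(self) -> str:
        return LETTERS[self.answer_index]


item = MultipleChoiceItem(
    video_id="clip-0001",  # made-up identifier
    question="The person uses which hand to cut the spaghetti?",
    options=("Left hand", "Right hand", "Both hands", "Neither hand"),
    answer_index=1,  # made-up label for illustration only
)
print(item.to_prompt())
```

Keeping the correct answer as an index rather than as free text makes each item straightforward to grade automatically, which is what an exam-style format is meant to allow.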

"We're basically coming up with a test for the model," a member of the team explains. "All of our questions are multiple choice, like what students would see on a school exam." But unlike human students, AI models must learn these concepts from scratch through reinforcement learning rather than through intuition.
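One reason multiple-choice questions pair naturally with reinforcement learning is that each answer can be checked automatically. The sketch below shows one way a training signal could be computed from a model's reply. It is an assumption for illustration, not Nvidia's method: the article says only that reinforcement learning is used, and the function names, the "Answer: X" reply convention, and the binary 1.0/0.0 reward are all hypothetical.

```python
import re

# Assumed convention: the model ends its reply with "Answer: <letter>".
ANSWER_PATTERN = re.compile(r"answer\s*:\s*\(?([A-D])\b", re.IGNORECASE)


def extract_choice(model_output: str):
    """Return the chosen letter (A-D) in upper case, or None if none is found."""
    match = ANSWER_PATTERN.search(model_output)
    return match.group(1).upper() if match else None


def multiple_choice_reward(model_output: str, correct_letter: str) -> float:
    """Binary reward: 1.0 for the correct letter, 0.0 otherwise."""
    choice = extract_choice(model_output)
    return 1.0 if choice == correct_letter.upper() else 0.0


print(multiple_choice_reward("The knife is in the right hand. Answer: B", "B"))
print(multiple_choice_reward("Answer: A", "B"))
print(multiple_choice_reward("I am not sure.", "B"))
```

A reply that does not follow the expected format scores zero here, the same as a wrong answer; a real training setup might treat malformed replies differently.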