Adobe just cracked open Photoshop's AI toolbox to outside models, letting creators tap Google's Gemini 2.5 Flash alongside Adobe's own Firefly for image generation. The move, announced at Adobe Max 2025, signals a major shift toward AI model flexibility. Adobe is also rolling out conversational editing assistants that could change how millions of people work with photos and videos.

Adobe's annual Max conference just delivered the biggest shakeup to creative software in years. For the first time, Photoshop is opening its Generative Fill feature to third-party AI models, breaking Adobe's traditional walled garden approach that's defined Creative Cloud for over a decade.

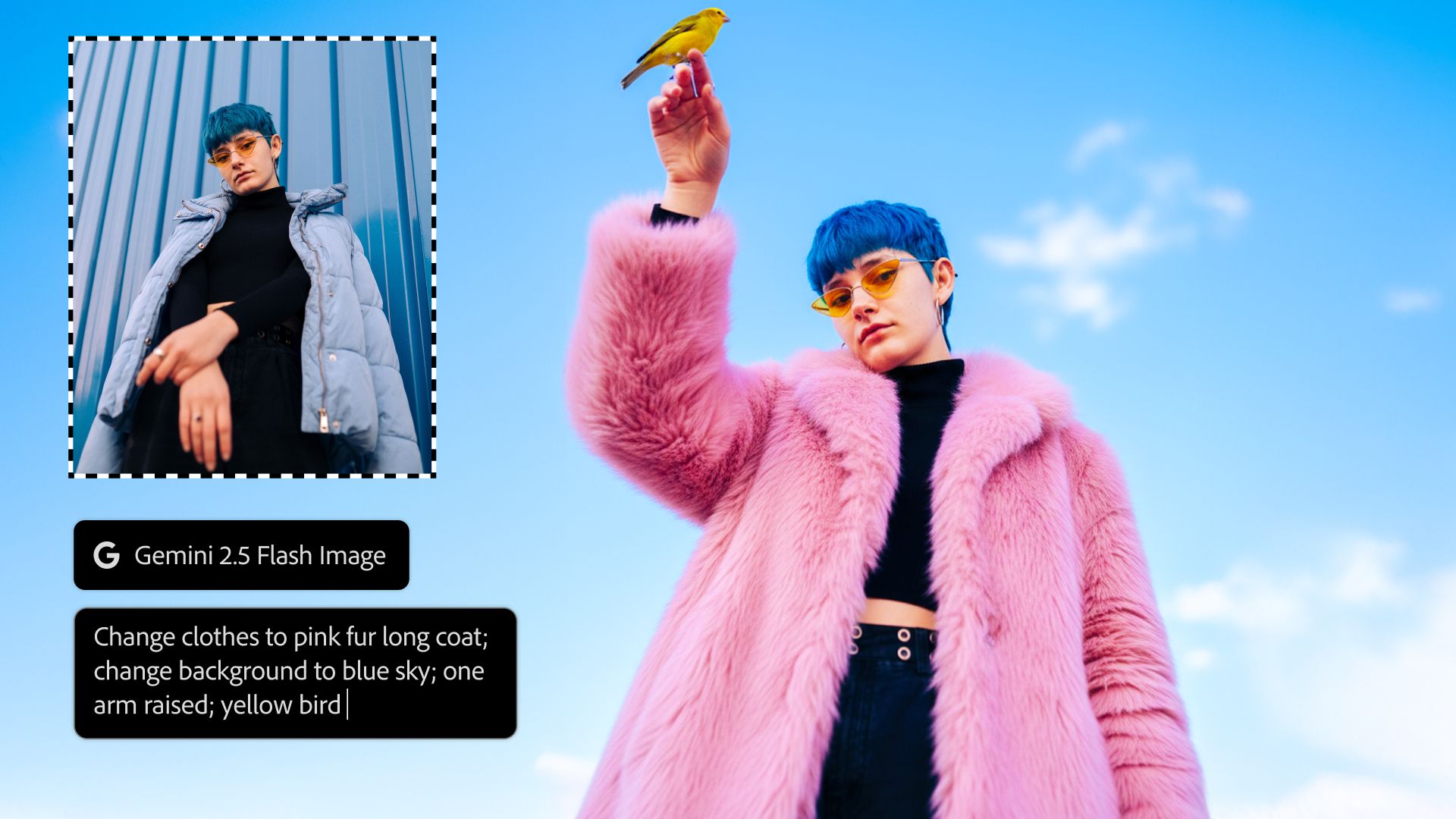

The integration lets users switch between Google's Gemini 2.5 Flash, Black Forest Labs' Flux.1 Kontext, and Adobe's own Firefly models right within Photoshop. After selecting an area and typing a prompt - say "replace this car with a bicycle" - creators can now compare results across multiple AI engines before choosing their favorite. It's a move that acknowledges what many professionals have been saying: no single AI model dominates every creative task.

But Adobe isn't just opening doors to competitors. The company's rolling out an AI Assistant for Photoshop web that feels like having a junior designer at your beck and call. Tell it "make the sky more dramatic" or "remove the person in the background," and it automatically navigates Photoshop's maze of tools to execute your vision. The feature, teased back in April, enters private beta alongside a similar tool for Adobe Express.

The timing couldn't be better for professional photographers drowning in image management. Adobe Lightroom's new "Assisted Culling" feature promises to sort through thousands of shots, automatically flagging the sharpest images with the best composition. For wedding photographers or photojournalists managing massive shoots, this could save hours of manual selection work.

Under the hood, Adobe's new Firefly Image 5 model generates images natively at 4 megapixels, which should cut down on the upscaling artifacts that plagued earlier versions. The company claims significant improvements in rendering realistic humans, one of generative AI's persistent weak spots. The model also powers new prompt-based editing features and a Layered Image Editing tool that understands context: move an object, and its shadows automatically adjust to match the new lighting.

Video editors aren't left out of Adobe's AI push. Premiere Pro's getting an AI Object Mask tool in public beta that automatically isolates people and objects from backgrounds across video frames. Instead of painstakingly drawing masks with the pen tool - a process that can take hours for complex scenes - editors can let AI handle the heavy lifting while they focus on color grading and effects.