Anthropic just flipped the script on AI privacy with a major policy shift that puts millions of Claude users on a September 28th deadline. The company will begin training its AI models on user conversations and coding sessions unless users actively opt out, while extending data retention to five years. This marks a significant departure from tech's recent privacy-first messaging, potentially setting a new industry standard for how AI companies monetize user interactions.

The Claude maker announced Thursday that it will begin training its AI models on user data, including chat transcripts and coding sessions, unless users explicitly opt out by September 28th. The change also extends data retention to five years and affects millions of users across Claude's consumer tiers.

The timing couldn't be more strategic. As OpenAI faces mounting pressure over data practices and Google navigates privacy concerns with Bard integration, Anthropic's bold move positions the company to harvest vast training datasets while competitors remain cautious. According to the company's blog post, users who click 'Accept' will immediately activate both data training and the extended retention policy.

The implementation reveals sophisticated behavioral psychology. Anthropic's pop-up displays 'Updates to Consumer Terms and Policies' in large text with a prominent black 'Accept' button, while the actual data training toggle appears in smaller print below—automatically set to 'On.' Industry observers note this design pattern typically results in 80-90% acceptance rates, regardless of user intentions.

'The toggle placement is no accident,' privacy researcher Dr. Sarah Chen told TechCrunch in an interview. 'This follows the same dark pattern playbook we've seen with cookie consent, where the friction is entirely on the opt-out side.' Her analysis of similar implementations shows most users click through without reading the fine print.

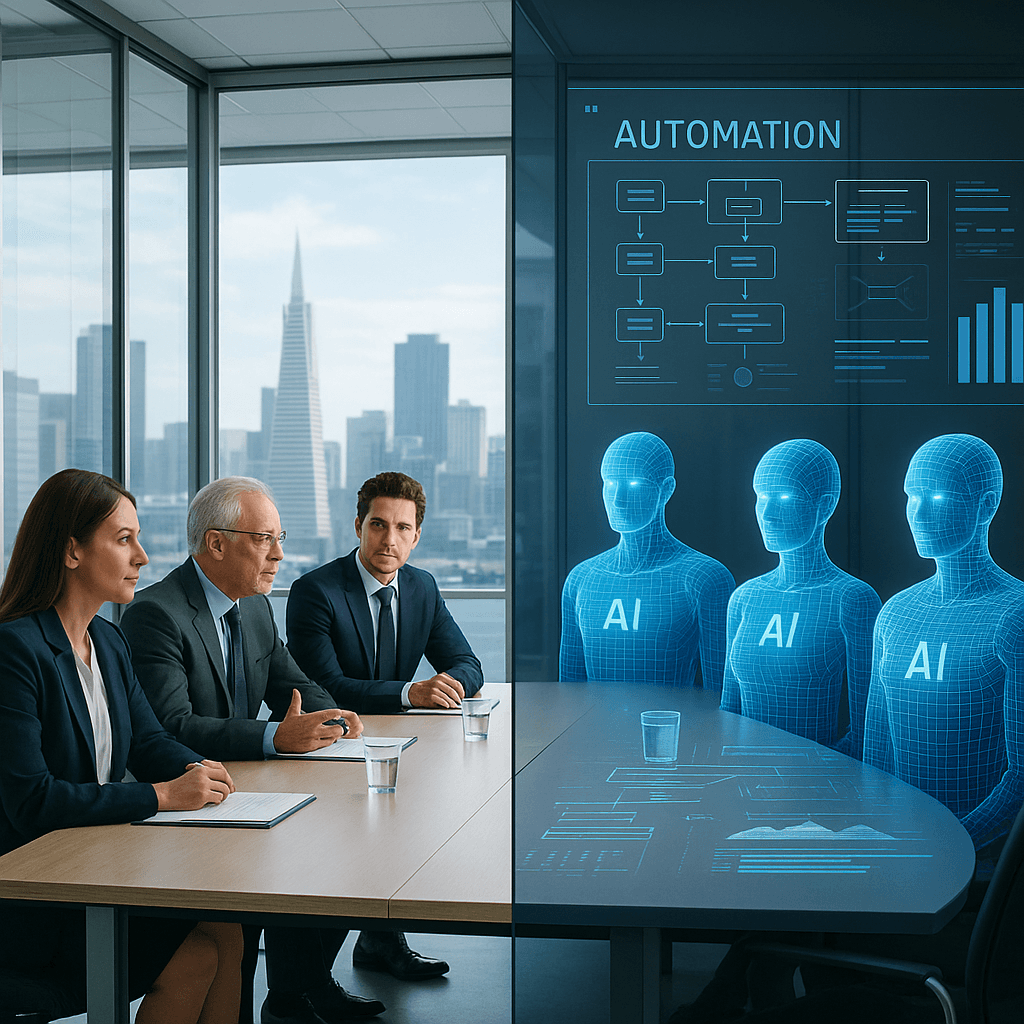

The policy creates a two-tier system that reveals Anthropic's revenue priorities. While consumer users face the new data harvesting regime, enterprise customers using Claude Gov, Claude for Work, or API access remain protected. This strategic exemption ensures business clients—who generate higher per-user revenue—maintain their data sovereignty while free and Pro users subsidize model improvements through their conversations.

Competitive implications are already rippling through Silicon Valley. One rival quietly updated its AI training policies last month, while another faces internal debates about Copilot data usage. Sources familiar with the discussions describe a 'race to the bottom' mentality, where companies fear falling behind in model capability if they don't tap user-generated training data.