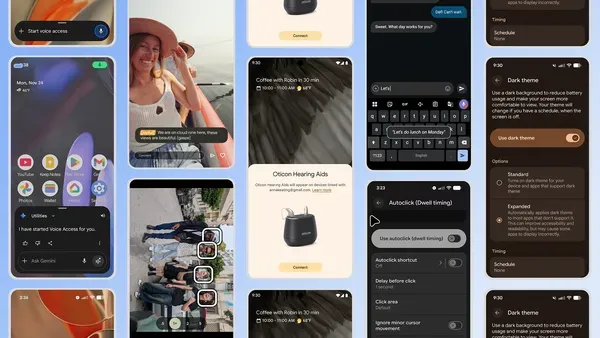

Google just unveiled seven major accessibility upgrades for Android, leveraging AI to make smartphones more inclusive for users with disabilities. The rollout coincides with International Day of Persons with Disabilities and includes features like automatic dark theme expansion, emotion-detecting captions, and hands-free Voice Access activation through Gemini.

Google is transforming Android accessibility with a suite of AI-powered features that roll out this December. The timing isn't coincidental: the updates launch ahead of International Day of Persons with Disabilities, and they represent Google's most significant accessibility push since launching TalkBack.

The most immediately noticeable change comes through an expanded dark theme option in Android 16. Unlike previous versions that relied on individual apps supporting dark mode, this system-level feature automatically darkens most apps on your device, even those without native dark theme support. "We've heard how frustrating it is to switch from a dark app to a glaring light one," explains Julie Cattiau, Product Manager for Android Accessibility at Google.

System-wide darkening addresses a real pain point for the estimated 285 million people worldwide living with vision impairments, according to WHO data. It keeps the look of the Android experience consistent from app to app, which particularly benefits users with low vision or light sensitivity.

But Google's bigger bet lies in AI-enhanced communication features. Expressive Captions, which already used machine learning to detect tone and environmental sounds, now identifies and tags emotional context in speech. Users will see captions marked with emotions like [joy] or [sadness], providing crucial contextual information often lost in traditional transcription.

The feature extends beyond Android devices. Google is bringing Expressive Captions capabilities to YouTube across all platforms, where English videos uploaded after October will automatically show speech intensity in capital letters and label environmental sounds. The move carries accessibility features that began on mobile into Google's wider ecosystem.