Latin America is building its own ChatGPT rival. CENIA, Chile's National Center for Artificial Intelligence, has unveiled Latam-GPT, a 50-billion-parameter language model trained primarily on Latin American and Caribbean sources, with a focus on the region's languages, dialects, and cultural contexts. The open-source project launches this year with backing from 33 regional institutions and is the region's boldest bid yet to counter US dominance in AI.

The AI revolution just got a Latin American accent. CENIA, Chile's nonprofit AI research center, is preparing to launch Latam-GPT, a large language model designed specifically for Latin America and the Caribbean, giving the region an alternative to the models from OpenAI, Google, and other tech giants that currently dominate it.

"We're not looking to compete with OpenAI, DeepSeek, or Google," CENIA director Álvaro Soto told WIRED en Español. "We want a model specific to Latin America and the Caribbean, aware of the cultural requirements and challenges that this entails, such as understanding different dialects, the region's history, and unique cultural aspects."

The project reflects growing concern about AI colonialism: the idea that models trained in the US impose American cultural biases on the rest of the world. Ask an existing model about education in Latin America, Soto explains, and "it would probably tell you about George Washington." He adds: "We should be concerned about our own needs; we cannot wait for others to find the time to ask us what we need."

In scale, Latam-GPT is comparable to OpenAI's GPT-3.5. The model has 50 billion parameters and has been trained on more than 8 terabytes of regional text, roughly the equivalent of millions of books. The corpus draws on 20 Latin American countries plus Spain and totals 2.6 million documents.

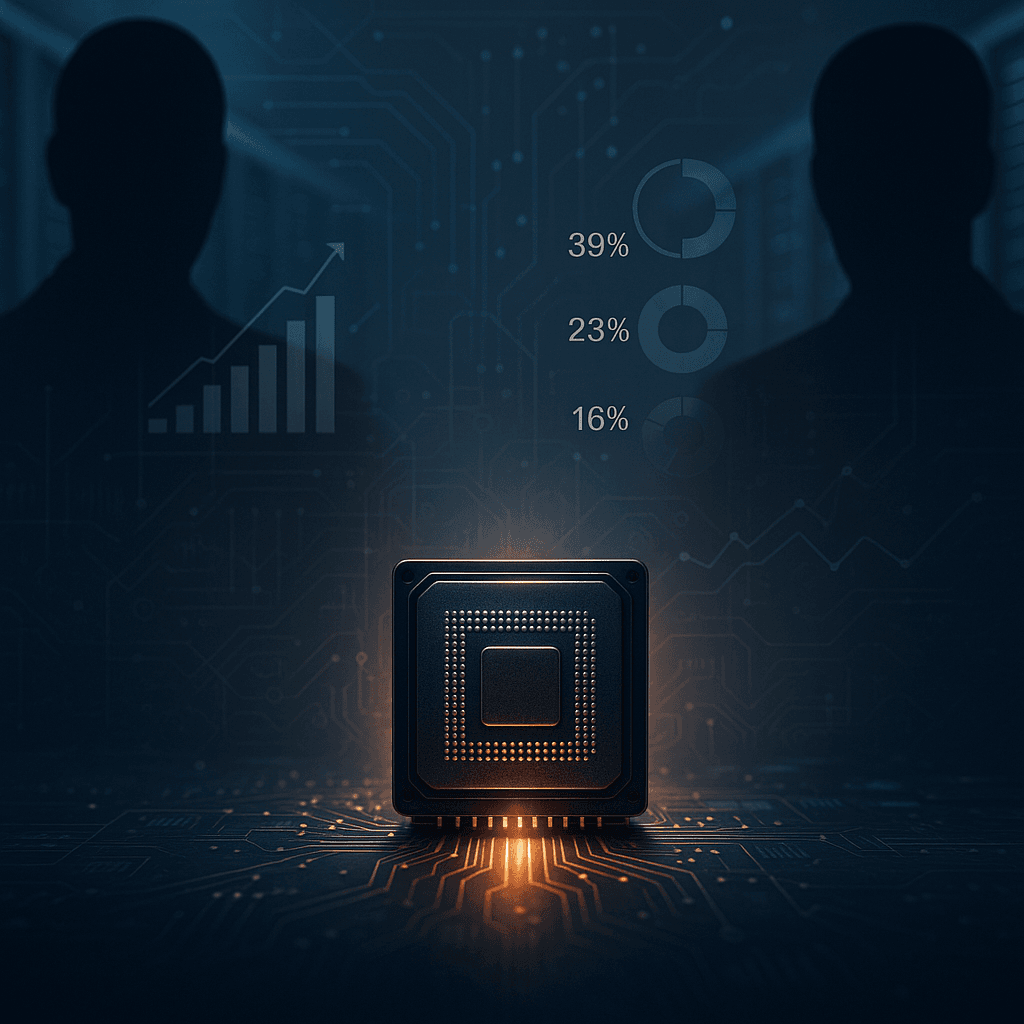

The data distribution reveals the project's collaborative scope: Brazil leads with 685,000 documents, followed by Mexico (385,000), Spain (325,000), Colombia (220,000), and Argentina (210,000). The numbers reflect each country's digital development and content availability, but the team actively seeks balanced representation. "If we notice that Nicaragua is underrepresented in the data, for example, we'll actively seek out collaborators there," Soto notes.
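To put those figures in proportion, the short sketch below computes each country's share of the corpus. It uses only the document counts reported above and assumes the 2.6 million total is a rounded figure, so the percentages are approximate; the helper name `share_of_corpus` is illustrative, not part of the project.

```python
# Document counts per country as reported for the Latam-GPT corpus.
# Assumption: the 2.6 million total is rounded, so all shares are approximate.
TOTAL_DOCUMENTS = 2_600_000

top_contributors = {
    "Brazil": 685_000,
    "Mexico": 385_000,
    "Spain": 325_000,
    "Colombia": 220_000,
    "Argentina": 210_000,
}


def share_of_corpus(count: int, total: int = TOTAL_DOCUMENTS) -> float:
    """Return a contributor's share of the corpus as a percentage (hypothetical helper)."""
    return 100 * count / total


# Print each of the five largest contributors with its approximate share.
for country, count in top_contributors.items():
    print(f"{country:<10} {count:>9,} docs  {share_of_corpus(count):5.1f}%")

# 20 Latin American countries plus Spain = 21 sources; five are listed above.
top_five = sum(top_contributors.values())
remaining = TOTAL_DOCUMENTS - top_five
print(f"Top five: {top_five:,} docs ({share_of_corpus(top_five):.1f}%)")
print(f"Other 16 countries: {remaining:,} docs ({share_of_corpus(remaining):.1f}%)")
```

By this rough count, the five largest contributors supply about 70 percent of the documents, leaving roughly 30 percent spread across the other 16 countries, which illustrates why the team is seeking collaborators in underrepresented places.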

Underpinning this effort is Latin America's most powerful AI supercomputer. The University of Tarapacá in Arica, Chile, recently installed a $10 million computing cluster of 12 nodes, each equipped with eight NVIDIA H200 GPUs. Together, those 96 GPUs give the region computing capacity it has not had before and make large-scale model training possible within Latin America itself.