Black Forest Labs just unleashed FLUX.2, a powerful family of image generation models that's making waves in the AI art community. The 32-billion-parameter models deliver photorealistic images of up to 4 megapixels with multi-reference capabilities, but here's the kicker: NVIDIA worked with Black Forest Labs to slash VRAM requirements by 40% through FP8 quantization.

Black Forest Labs is shaking up the AI art world with FLUX.2, an image generation model that's already sending ripples through creative communities. The company released the 32-billion-parameter model today, promising to eliminate the telltale 'AI look' that has plagued generated images for years.

What sets FLUX.2 apart isn't just its photorealistic output; it's the engineering partnership with NVIDIA that makes these models usable on local hardware. Without optimization, FLUX.2 demands a staggering 90GB of VRAM, far beyond the reach of typical consumer hardware. Even the lowVRAM mode still requires 64GB, effectively locking out anyone without enterprise-grade equipment.
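For a sense of scale, a rough estimate of weight storage alone multiplies the parameter count by the bytes each parameter occupies. The sketch below is a back-of-envelope calculation, not a measurement: it assumes all 32 billion parameters share one precision and ignores activations, text encoders, and runtime overhead, so real VRAM use will be higher.

```python
# Back-of-envelope estimate of the memory needed just to hold model weights.
# Assumption: every parameter is stored at the same precision; activations,
# text encoders, and framework overhead are deliberately ignored.

BYTES_PER_PARAM = {
    "fp32": 4,
    "bf16": 2,
    "fp8": 1,
}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 32_000_000_000  # parameter count quoted for FLUX.2
    for precision in ("fp32", "bf16", "fp8"):
        print(f"{precision}: {weight_memory_gb(params, precision):.0f} GB")
```

Running it prints 128 GB for FP32, 64 GB for BF16, and 32 GB for FP8. Halving the bytes per parameter halves weight storage; the 40% overall saving reported for FLUX.2 is smaller, which would fit with some memory not shrinking under quantization, though the figures aren't broken down here.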

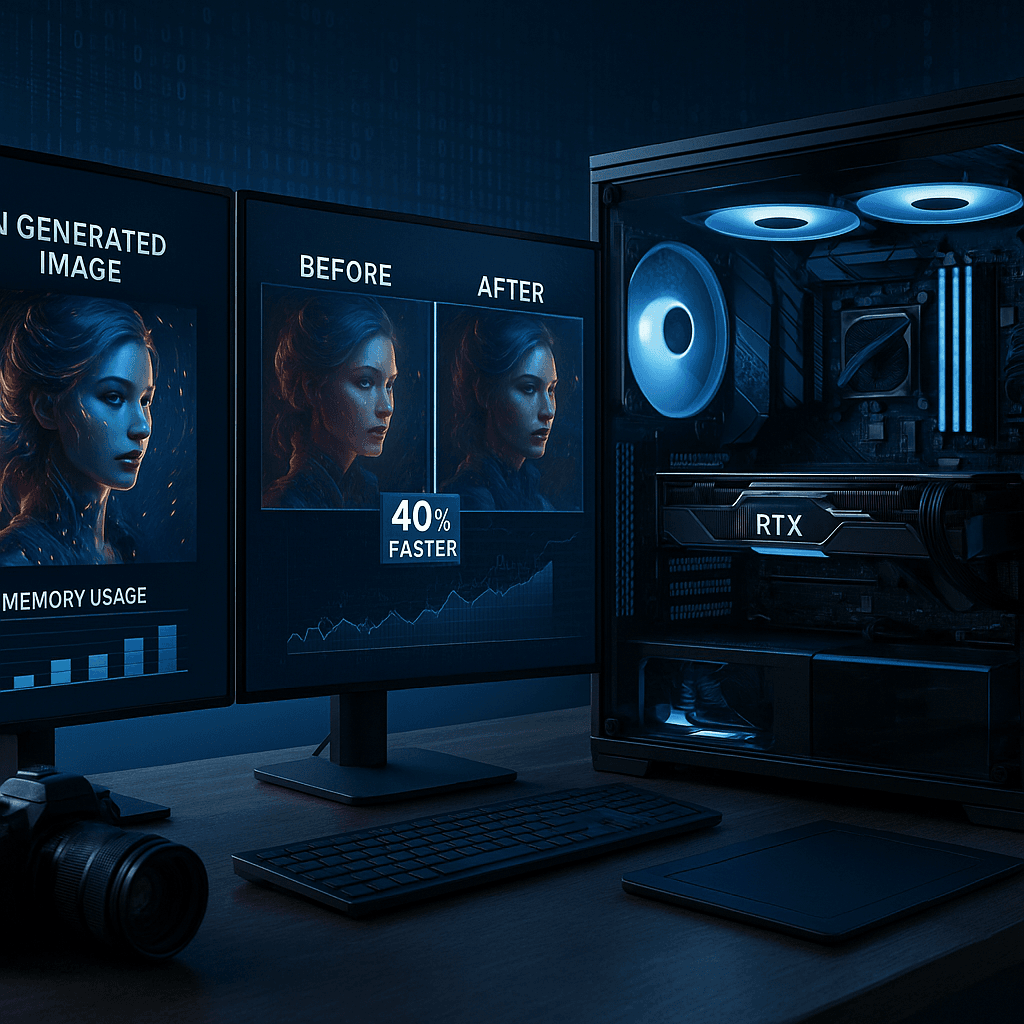

That's where NVIDIA's collaboration gets interesting. The two companies worked together to quantize FLUX.2 to FP8 precision, slashing VRAM requirements by 40% while maintaining image quality comparable to the unquantized model. According to NVIDIA's blog post, the optimization also delivers a 40% performance boost on RTX GPUs.
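FP8 stores each number in a single byte. The exact FP8 variant and scaling scheme Black Forest Labs and NVIDIA used aren't specified here, so the sketch below assumes the common E4M3 format (4 exponent bits, 3 mantissa bits, largest finite value 448) with one scale factor per tensor. It simulates the rounding in plain Python to show where precision is lost; it is an illustration, not their quantization pipeline, and the helper names are hypothetical.

```python
import math

E4M3_MAX = 448.0  # largest finite FP8 E4M3 value (1.75 * 2**8)


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest FP8 E4M3 value, saturating at +/-448."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    exp = math.frexp(a)[1] - 1  # a = 1.m * 2**exp
    exp = max(exp, -6)          # below 2**-6 values are subnormal
    step = 2.0 ** (exp - 3)     # 3 mantissa bits -> 8 steps per power of two
    # round() is half-to-even, matching round-to-nearest-even on the mantissa.
    return sign * min(round(a / step) * step, E4M3_MAX)


def quantize_fp8(weights: list[float]) -> tuple[list[float], float]:
    """Per-tensor scaling: map the largest |w| onto E4M3_MAX, then round."""
    amax = max(abs(w) for w in weights)
    scale = amax / E4M3_MAX if amax > 0 else 1.0
    return [round_to_e4m3(w / scale) for w in weights], scale


def dequantize_fp8(codes: list[float], scale: float) -> list[float]:
    """Undo the scaling; the rounding error remains."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.31, -1.27, 0.004, 2.5, -0.08]
    codes, scale = quantize_fp8(weights)
    for w, r in zip(weights, dequantize_fp8(codes, scale)):
        print(f"{w:+.4f} -> {r:+.4f}")
```

The largest weight maps onto 448, and every other weight lands within about 1/16 of its own magnitude as long as its scaled value stays in E4M3's normal range. Finer-grained schemes (per-channel or per-block scales) help mainly by keeping small-magnitude groups out of the subnormal range, at the cost of storing more scale factors.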

The technical improvements go deeper than memory efficiency. FLUX.2 generates images of up to 4 megapixels with what Black Forest Labs calls 'real-world lighting and physics.' The models support direct pose control, letting artists specify exact character positioning without the usual prompt gymnastics. But the standout feature might be multi-reference support: users can feed in up to six reference images to keep a style or subject consistent across generations.
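A 4-megapixel ceiling is a pixel budget rather than a fixed resolution, so the largest usable width and height depend on the aspect ratio. The helper below is a hypothetical illustration: it assumes 1 megapixel means 1,000,000 pixels and that both sides must be multiples of 16 (a common constraint for latent diffusion models, not something stated here for FLUX.2).

```python
import math


def dims_for_megapixels(aspect_w: int, aspect_h: int,
                        megapixels: float = 4.0,
                        multiple: int = 16) -> tuple[int, int]:
    """Largest width x height at a given aspect ratio within a pixel budget.

    Hypothetical assumptions: 1 MP = 1,000,000 pixels, and both sides
    must be multiples of `multiple`.
    """
    budget = megapixels * 1_000_000
    ratio = aspect_w / aspect_h
    height = math.sqrt(budget / ratio)  # solve width * height = budget
    width = height * ratio
    # Round each side down so the product never exceeds the budget.
    w = int(width // multiple) * multiple
    h = int(height // multiple) * multiple
    return w, h


if __name__ == "__main__":
    for aspect in ((1, 1), (3, 2), (16, 9)):
        w, h = dims_for_megapixels(*aspect)
        print(f"{aspect[0]}:{aspect[1]} -> {w} x {h} ({w * h:,} px)")
```

For 16:9 this gives 2656 × 1488, and for a square image 2000 × 2000. For comparison, a 3840 × 2160 (4K UHD) frame is about 8.3 megapixels, roughly twice the budget.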

ComfyUI, the popular open-source interface for AI image generation, played a crucial role in making FLUX.2 accessible. NVIDIA partnered with the ComfyUI developers to enhance the platform's weight streaming feature, which offloads parts of the model to system memory when GPU memory runs low. Streaming adds some performance overhead, but it opens the door for RTX 4080 and 4090 owners to run these models locally.
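Weight streaming trades speed for capacity: whatever doesn't fit in VRAM has to cross the PCIe bus each time it's used. ComfyUI's actual implementation isn't described here, so the sketch below is a simplified, hypothetical planner. It assumes the model splits into blocks of known size, keeps blocks on the GPU greedily until the budget runs out (reserving a staging buffer for streamed blocks to land in), and estimates copy time from an assumed effective bandwidth.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    resident: list[str]  # blocks kept in VRAM for the whole run
    streamed: list[str]  # blocks held in system RAM and copied in when needed
    streamed_gb: float   # data copied to the GPU on every forward pass


def plan_weight_streaming(blocks: dict[str, float],
                          vram_budget_gb: float) -> Placement:
    """Greedy, hypothetical placement of model blocks (sizes in GB)."""
    if sum(blocks.values()) <= vram_budget_gb:
        return Placement(list(blocks), [], 0.0)  # everything fits, no streaming

    # Reserve a staging buffer as large as the largest block,
    # so any streamed block has room to land.
    budget = vram_budget_gb - max(blocks.values())
    resident: list[str] = []
    streamed: list[str] = []
    used = 0.0
    for name, size in blocks.items():  # dicts preserve insertion order
        if used + size <= budget:
            resident.append(name)
            used += size
        else:
            streamed.append(name)
    return Placement(resident, streamed, sum(blocks[n] for n in streamed))


def transfer_seconds(streamed_gb: float, bandwidth_gb_s: float) -> float:
    """Copy time per forward pass, assuming no overlap with compute."""
    return streamed_gb / bandwidth_gb_s


if __name__ == "__main__":
    model = {f"block{i}": 4.0 for i in range(8)}  # hypothetical 32 GB model
    plan = plan_weight_streaming(model, vram_budget_gb=16.0)
    print(len(plan.resident), "resident,", len(plan.streamed), "streamed")
    print(f"{plan.streamed_gb:.0f} GB per pass, "
          f"~{transfer_seconds(plan.streamed_gb, 25.0):.1f} s at an assumed 25 GB/s")
```

With a hypothetical 32 GB model split into eight 4 GB blocks and a 16 GB card, three blocks stay resident and 20 GB is streamed per pass; at an assumed 25 GB/s that adds roughly 0.8 s per denoising step if nothing is overlapped. Real implementations can overlap copies with compute, which hides part of this cost.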

The timing couldn't be better for NVIDIA's RTX ecosystem. As AI image generation moves from cloud services to local hardware, having optimized models like FLUX.2 gives RTX users a significant advantage. The FP8 quantization isn't just about fitting models into smaller memory footprints; it's about making cutting-edge AI accessible to prosumers and indie creators who can't afford data center hardware.