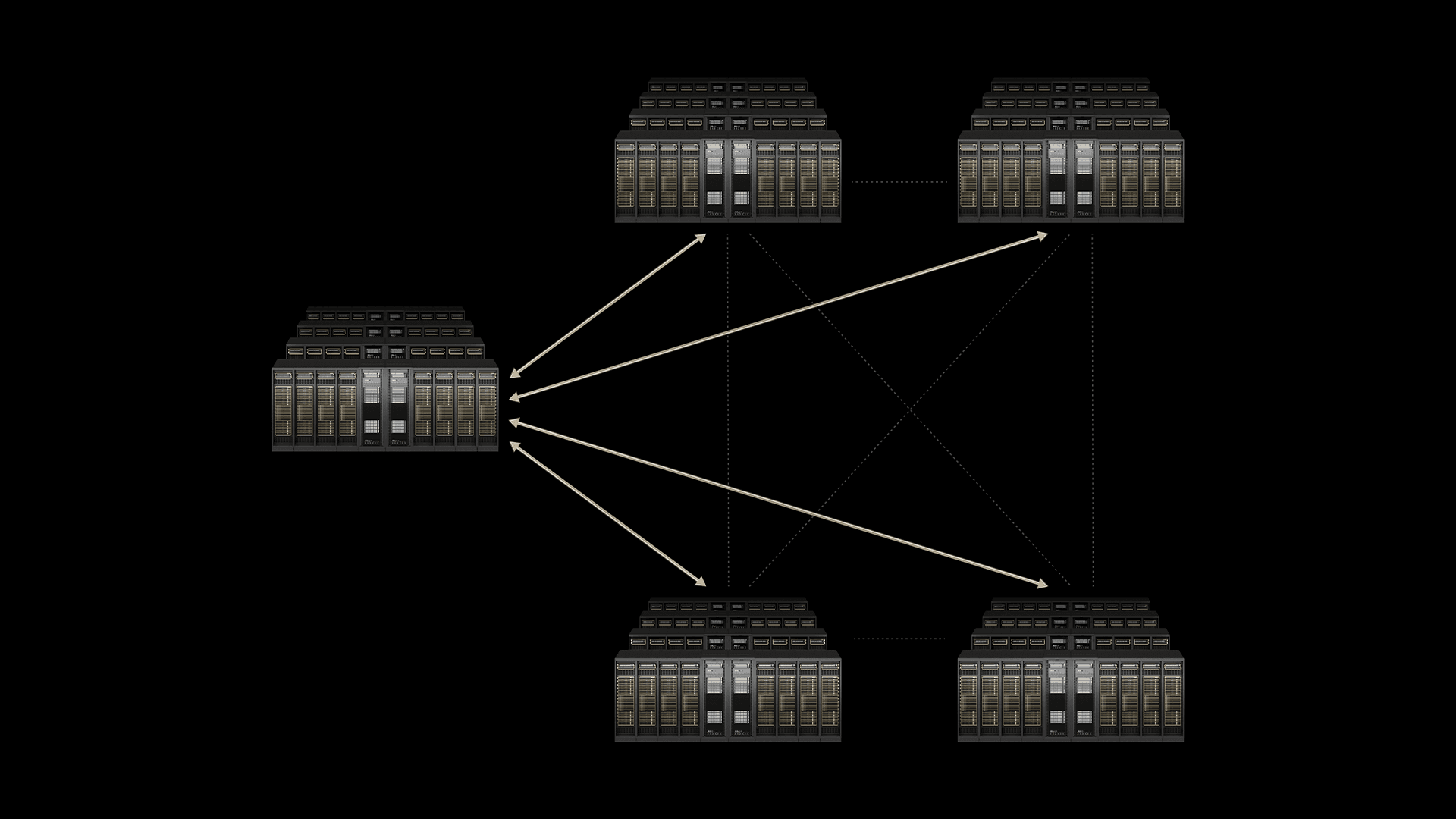

NVIDIA just unveiled Spectrum-XGS Ethernet, a breakthrough networking technology that connects geographically distributed data centers into unified "giga-scale AI super-factories." The announcement at Hot Chips signals a fundamental shift in how AI infrastructure scales beyond single facilities, with CoreWeave among the first adopters planning to link its global data centers into one massive supercomputer.

NVIDIA is making a bold bet on distributed computing as the next stage of AI infrastructure. The company's new Spectrum-XGS Ethernet technology promises to solve one of the industry's most pressing challenges: individual data centers are maxing out their power and space capacity while AI workloads keep demanding more scale.

The timing couldn't be more critical. As AI models balloon in size and complexity, hyperscale operators are bumping against the physical limits of what a single facility can handle. Traditional Ethernet networking simply wasn't designed for the ultra-low latency and predictable performance required to treat multiple data centers as one unified compute resource.

Spectrum-XGS Ethernet changes that equation entirely. The technology extends NVIDIA's existing Spectrum-X platform with what the company calls "scale-across" capabilities - a third pillar of AI computing beyond the familiar scale-up and scale-out approaches. According to NVIDIA's press release, the system nearly doubles the performance of the NVIDIA Collective Communications Library (NCCL), the software that orchestrates multi-GPU communication across AI clusters.

"The AI industrial revolution is here, and giant-scale AI factories are the essential infrastructure," NVIDIA founder and CEO Jensen Huang said in the announcement. "With NVIDIA Spectrum-XGS Ethernet, we add scale-across to scale-up and scale-out capabilities to link data centers across cities, nations and continents into vast, giga-scale AI super-factories."

The technical breakthrough lies in advanced algorithms that dynamically adapt network behavior based on the physical distance between facilities. Auto-adjusted distance congestion control, precision latency management, and end-to-end telemetry work together to deliver predictable performance even when AI workloads span continents. This isn't just theoretical - CoreWeave, the AI-focused cloud provider, is already planning to deploy Spectrum-XGS across its global infrastructure.
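A rough calculation shows why distance matters so much to congestion control. The sketch below is not NVIDIA's algorithm. It only estimates how much data must be in flight to keep a link busy as the fiber gets longer. The propagation speed (about 200,000 km/s in optical fiber), the 400 Gb/s link rate, and the sample distances are all illustrative assumptions, not figures from the announcement.

```python
# Back-of-the-envelope sketch, not NVIDIA's method.
# Assumption: light in optical fiber covers roughly 200,000 km per second
# (about two thirds of its speed in a vacuum).
FIBER_KM_PER_SEC = 200_000.0


def round_trip_ms(distance_km: float) -> float:
    """Propagation-only round-trip time over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_KM_PER_SEC * 1000


def bdp_megabytes(link_gbps: float, distance_km: float) -> float:
    """Bandwidth-delay product: data in flight needed to keep the link full."""
    rtt_seconds = round_trip_ms(distance_km) / 1000
    bits_in_flight = link_gbps * 1e9 * rtt_seconds
    return bits_in_flight / 8 / 1e6  # bits -> bytes -> megabytes


if __name__ == "__main__":
    link_gbps = 400.0  # hypothetical link rate, chosen for illustration
    for km in (0.1, 10, 1_000, 10_000):  # hypothetical site separations
        print(
            f"{km:>8} km: RTT {round_trip_ms(km):.3f} ms, "
            f"in-flight data {bdp_megabytes(link_gbps, km):.2f} MB"
        )
```

Under these assumptions, a link inside one building needs well under a megabyte in flight, while a 1,000 km link needs hundreds of megabytes. Feedback about congestion also takes milliseconds rather than microseconds to arrive, which is the kind of gap that distance-aware tuning is meant to address.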