TL;DR

- Scrutinize data visualizations before public releases
- Incorrect graphs undermined OpenAI at the GPT-5 launch
- Shows the potential pitfalls of rushed presentations in tech
- Maintain rigorous internal checks to uphold credibility

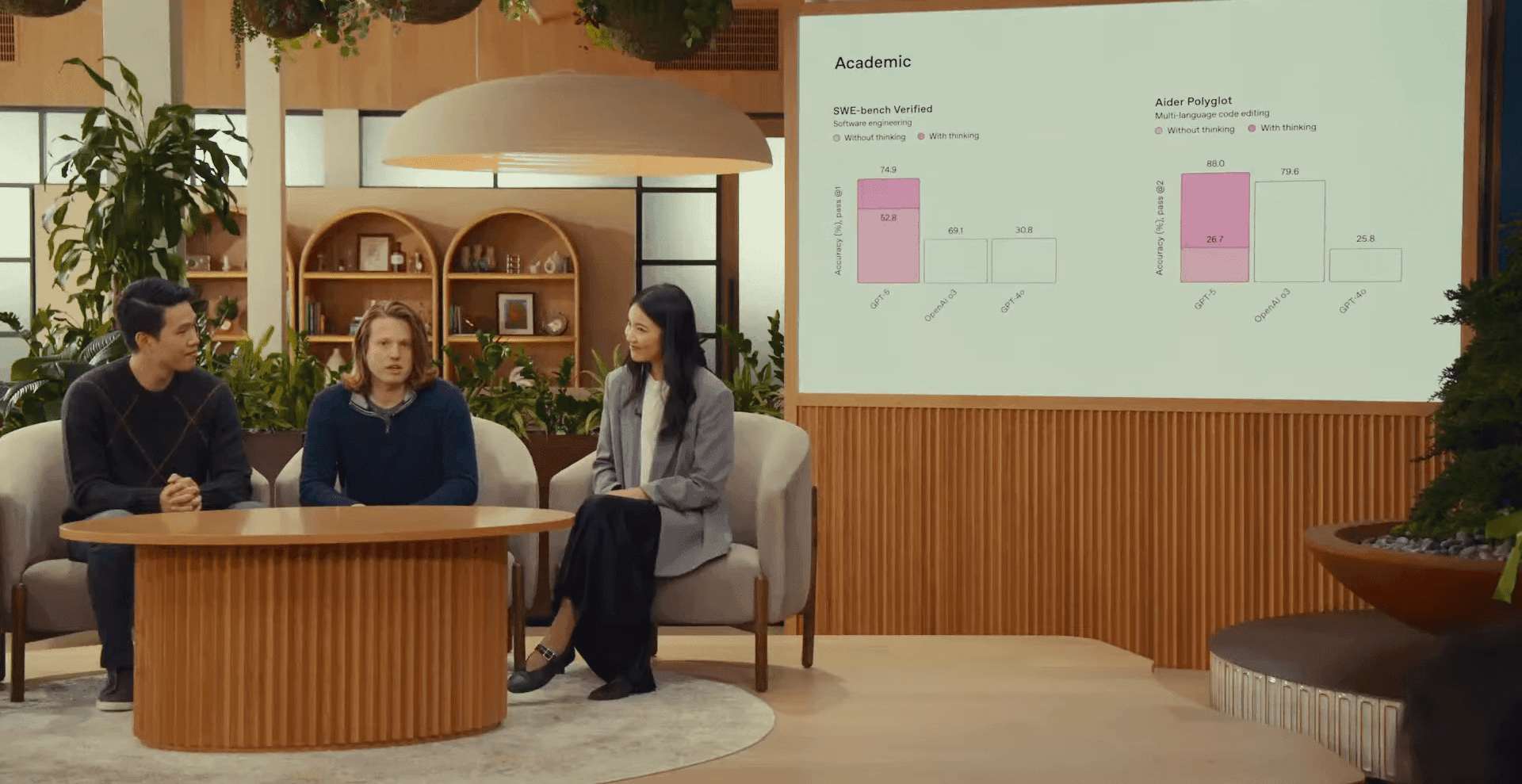

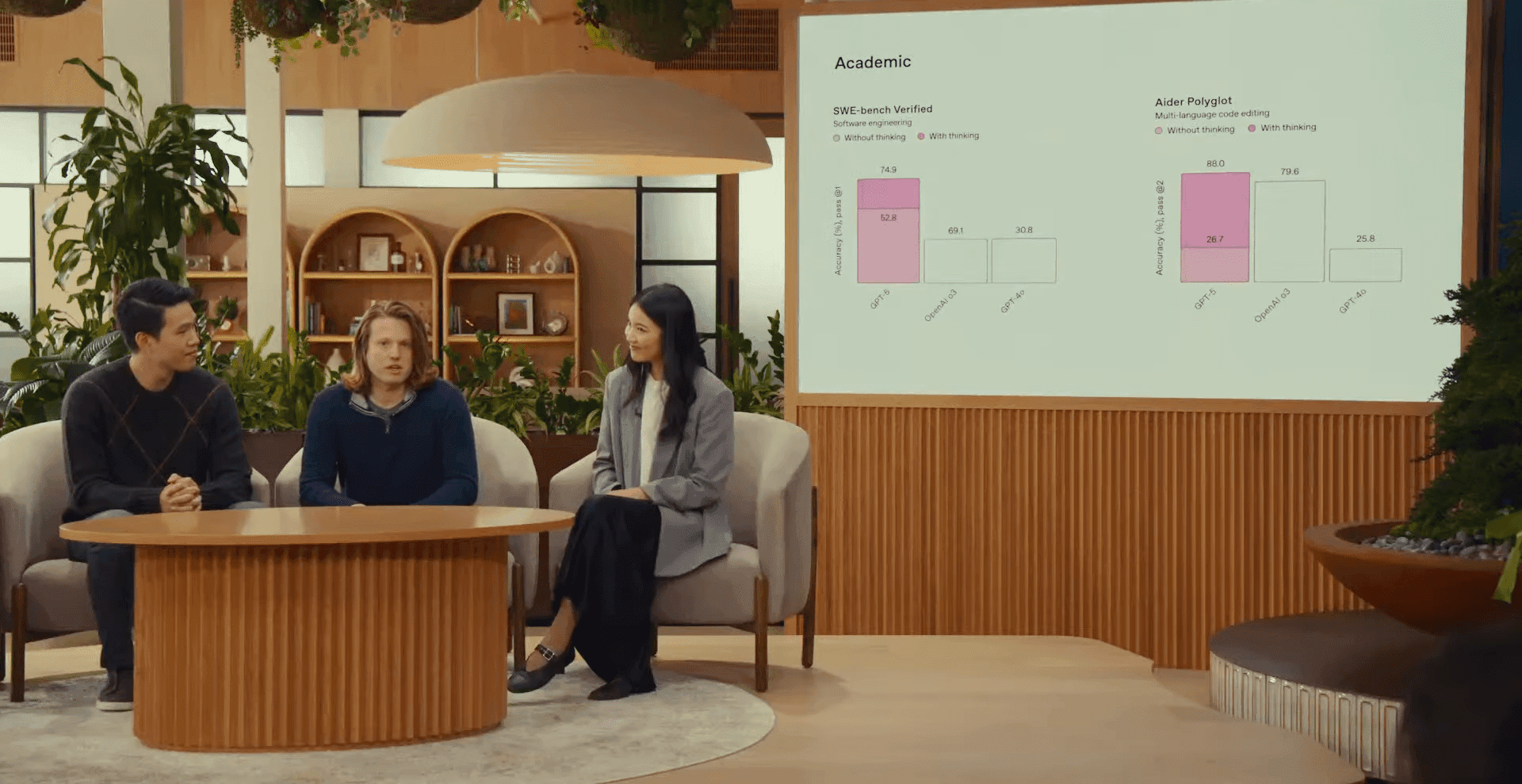

OpenAI's GPT-5 presentation aimed to highlight the advances of its newest AI model, particularly in reducing deceptive behavior. However, glaring errors in the graphs shown during the livestream undercut that narrative and raised questions about data integrity. At a time when data visualizations are supposed to strengthen arguments, what can tech leaders learn from OpenAI's public misstep?

Opening Analysis

Errors in data visualizations, especially during high-stakes presentations like OpenAI's GPT-5 launch, reveal how fragile visual evidence becomes without thorough vetting. During the event, inconsistent scales and mismatched bar sizes, such as a 50% coding-deception rate drawn with a shorter bar than a 47.4% rate, shifted attention from the model's capabilities to the presentation's credibility.
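Inconsistencies like a 50% bar drawn shorter than a 47.4% bar are mechanical enough for a simple automated check to catch before a chart ships. The sketch below is a hypothetical pre-release check, not anything OpenAI is known to use. It assumes each bar's plotted value and rendered height can be exported from the charting tool, and that the axis is linear and starts at zero. `Bar` and `check_bars` are illustrative names, and the pixel heights in the example are made up.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Bar:
    label: str
    value: float   # number printed on the chart, e.g. 50.0 (percent)
    height: float  # rendered bar height, e.g. in pixels (hypothetical export)


def check_bars(bars: list[Bar], rel_tol: float = 0.02) -> list[str]:
    """Flag bars whose drawn height contradicts their value.

    Assumes non-negative values on a linear axis that starts at zero,
    so height / value should be the same for every bar.
    """
    issues: list[str] = []

    # Ordering check: a larger value must never get a shorter bar.
    for a in bars:
        for b in bars:
            if a.value > b.value and a.height < b.height:
                issues.append(
                    f"{a.label} ({a.value}) is drawn shorter than {b.label} ({b.value})"
                )

    # Proportionality check: compare each bar with the median height/value scale.
    nonzero = [b for b in bars if b.value != 0]
    if nonzero:
        scale = median(b.height / b.value for b in nonzero)
        for b in nonzero:
            expected = b.value * scale
            if abs(b.height - expected) > rel_tol * expected:
                issues.append(
                    f"{b.label}: height {b.height} deviates from expected {expected:.1f}"
                )

    return issues


if __name__ == "__main__":
    # Hypothetical rendering that repeats the error described above.
    chart = [Bar("Model A", 50.0, 120.0), Bar("Model B", 47.4, 180.0)]
    for message in check_bars(chart):
        print(message)
```

Using the median scale rather than the first bar as the reference keeps a single mis-drawn bar from making every other bar look wrong, although with only two bars both will be flagged when they disagree.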

Market Dynamics

In the competitive landscape of AI development, such mishaps can be highly damaging. Despite OpenAI's prominence in the field, a misstep this visible gives competitors an opening to capitalize on public doubts. Rivals such as Google and Anthropic are watching closely.

Technical Innovation

The incident reflects a failure of presentation, not of technical capability. GPT-5 still represents progress in reducing hallucinations and improving output fidelity; the challenge now is communicating those capabilities transparently.

Financial Analysis

For stakeholders, errors of this nature can suggest deeper oversight issues, potentially affecting future funding rounds or partnerships. While OpenAI remains a funding magnet, maintaining trust from investors demands visible accountability.

Strategic Outlook

In the next 3-6 months, OpenAI may prioritize improving its public communications and tightening its internal review processes. In the longer term, AI companies should recognize the importance of clarity and accuracy and learn from these blunders to reduce risk in future launches.

Key Takeaways:

- Data accuracy in public forums safeguards credibility.